Introduction – The Human Challenge in an AI-Rich World

In just a few short years, artificial intelligence has begun to reshape how we teach, learn, and lead in education. The last major shift of this magnitude was the rise of the internet and personal computing. But there is an important difference: computers and the web took decades to diffuse through schools. We had time to build infrastructure, pilot initiatives, and gradually move toward one-to-one device programs. Today, it is almost impossible to imagine attending school or going to work without access to a computer and the internet.

AI is following a similar trajectory in terms of inevitability, but on a radically compressed timeline. We did not get several decades to prepare. Generative AI tools appeared in the hands of students, faculty, and staff almost overnight, often embedded inside the platforms we already use. It should not surprise us, then, that many educators and leaders feel caught off guard. The question facing colleges, universities, and K–12 systems is no longer whether AI should be used in teaching and learning, but how it should be used, by whom, and under what conditions.

That “how” matters because education is not the same as the workplace. Employers can typically assume that employees already possess foundational knowledge and skills; AI is used primarily to increase efficiency or creativity. In the classroom, our charge is different. We are responsible for ensuring that learners develop knowledge, skills, and judgment. If students outsource too much thinking to AI, they may produce polished work without building the underlying understanding. The challenge is not simply to let students use AI, but to help them use it in ways that enhance, rather than replace, human creativity and critical thinking.

In my earlier work with the TrAIT framework (Transparency in AI and Teaching), I focused on how to clearly communicate when AI is allowed, restricted, or prohibited, and how students should disclose their AI use. TrAIT gives learners and instructors the “rules of the game” around permissibility and transparency.

The next step is to answer a more practical question: When AI is allowed, how should we use it? That is where the AI Sandwich, a human-first, human-last approach to learning in an AI-rich educational environment, comes in. The AI Sandwich offers a simple, memorable structure for keeping human thinking before and after AI assistance. Instead of placing AI at the center of learning, the AI Sandwich asks learners, and the educators who support them, to begin with their own ideas, questions, and attempts, then invite AI in as a partner, and finally return to human judgment to refine, verify, and personalize the results. In other words: think first, collaborate with AI second, and think again at the end.

The AI Sandwich is designed to be usable across the entire educational ecosystem. Institutional leaders can use it to frame policies and professional expectations for responsible AI adoption. Faculty and instructional designers can apply it to lesson planning, assignments, and assessment so that AI supports, rather than erodes, student learning. Staff can leverage AI within this structure to make their work more efficient and student-centered, while still exercising professional judgment. And students can use the AI Sandwich as a guide for protecting their own voice and ensuring that AI does not short-circuit the learning process.

Why Human-First, Human-Last Matters

Long before AI entered the picture, we were already outsourcing pieces of our thinking to technology. Many of us can remember a time when we knew dozens of phone numbers by heart. Today, most people can recite only a handful—if any—because smartphones remember them for us. It is not that we lost the ability to memorize numbers; we simply stopped investing mental effort there because a tool took over the job.

That trade-off is acceptable for phone numbers. It is far more consequential when the thing we are outsourcing is thinking itself—our creativity, our critical judgment, our ability to wrestle with complex ideas. When learners turn to AI too early, especially at the start of a task, they risk letting the tool define the boundaries of their thinking. Generative AI often produces a polished, “cookie-cutter” response: plausible, organized, and confident. Once a human sees that answer, it becomes cognitively difficult to imagine alternatives. The first answer becomes the anchor.

Human-first work interrupts that pattern. When individuals or teams begin by brainstorming, sketching ideas, or attempting solutions before consulting AI, they keep those neural pathways for creativity and critical thinking active. They generate original starting points that reflect their prior knowledge, context, and lived experiences. Only after this human work is on the table do they invite AI into the conversation—asking it to extend, critique, clarify, or reorganize what they already have. AI becomes a co-thinker rather than the architect of the entire response.

Equally important is the “human-last” phase. After AI has generated suggestions, examples, or explanations, learners must re-engage their own judgment. They decide what to keep, what to discard, what needs to be rewritten in their own voice, and what should be fact-checked or cross-referenced with course materials. This final human pass is where accountability, ethics, and learning converge. It is also where we ensure that AI is enhancing understanding rather than silently replacing it.

This human-first, human-last pattern has particular power for students from under-resourced schools or first-generation backgrounds, for whom AI can act as an always-available tutor that works at their pace. When framed as a co-thinker, AI can help them catch up on background knowledge, experiment with examples, and get formative feedback they might not otherwise receive. The goal is not to deny these students the benefits of AI, but to help them use it in ways that amplify their learning rather than mask gaps in understanding.

The AI Sandwich Explained

In my earlier TrAIT framework, the emphasis was on clarity and disclosure: clearly signaling when AI is prohibited, when it is allowed in limited ways, and when it can be used more expansively. The AI Sandwich is the natural next step. Once we know whether AI is allowed, we still have to answer how to use it so that it supports learning rather than replaces it.

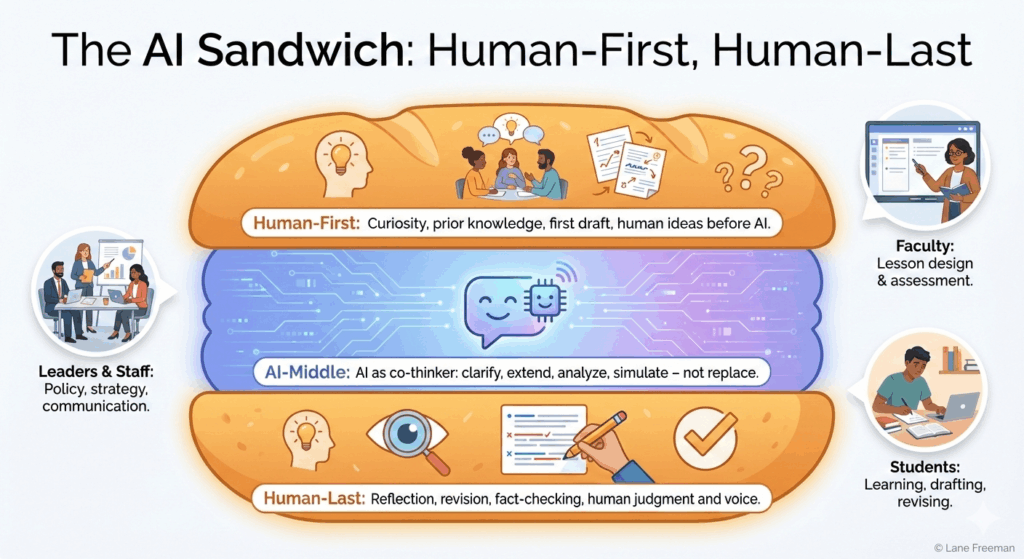

The AI Sandwich offers a simple mental model:

- Human-First – Think, plan, and create from your own mind.

- AI-Middle – Invite AI in as a partner to clarify, extend, analyze, or simulate.

- Human-Last – Return to your own judgment to refine, personalize, and decide what to keep.

Image description: A graphic visualization of the AI Sandwich concept, represented as a stylized sub sandwich. The top “bun” of the sandwich is labeled “Human-First: Curiosity, prior knowledge, first draft, human ideas before AI” with icons representing thought, collaboration, and brainstorming. The midsection “filling” of the sandwich is labeled “AI Middle: AI as co-thinker: clarify, extend, analyze, simulate – not replace” with an icon of a stylized chat bubble and computer chip. The bottom “bun” of the sandwich is labeled “Human-Last: reflection, revision, fact-checking, human judgement, and voice” with icons representing thought, investigation, and manual revision. Key players in the AI sandwich are labeled to the sides of the sandwich: leaders and staff, responsible for policy, strategy, and communication; faculty, responsible for lesson design and assessment; and students, responsible for learning, drafting, and revising.

Visually, you can imagine the human work as the top and bottom buns of a sandwich, and AI as the filling. The bread holds everything together; without it, the sandwich falls apart. Crucially, the human decides how thick each layer should be. Sometimes the top bun (human-first) is substantial and the AI layer is thin. In other situations, especially when staff or leaders already know the content deeply, AI might do more of the drafting, while the human still provides the final, decisive bottom bun.

Human-First: Thought Before Automation

Human-first means we start with curiosity, prior knowledge, and our own ideas before we ever open an AI tool. This step keeps the “thinking muscles” active and gives us a baseline to work from.

For students, human-first might look like:

- Brainstorming independently on paper or in a notebook.

- Doing a quickwrite about what they already know or wonder about a topic.

- Working in small groups to list questions, predictions, or possible approaches.

- Attempting a first draft or first solution set without AI, even if the spelling, grammar, or math is messy.

That first draft is about capturing the student’s voice and mental model so instructors can see where they’re starting from and where the misunderstandings are.

For faculty, human-first means remembering that AI does not know their students, their campus culture, or their goals as well as they do. Before turning to AI for help, instructors might:

- Sketch learning outcomes and big ideas for a unit.

- Identify the specific students in front of them—strengths, barriers, and needs.

- Choose a few instructional strategies they already know work with this group.

Then, when they go to AI, they’re not asking, “What should I teach?” They’re asking, “Here’s who I’m teaching and what I’m trying to do—what might I be missing?”

For staff and leaders, human-first can be as simple as:

- Jotting down key points of a strategic plan they already know well.

- Listing the questions they want a report or communication to answer.

- Clarifying the decision they’re trying to make.

In each case, the human sets the direction; AI arrives later as a helper, not the driver.

AI-Middle: Partnering, Not Delegating

The middle of the sandwich is where AI belongs: after humans have contributed initial thinking, but before they finalize or publish anything. The key is to treat AI as a co-thinker, not a substitute.

In this phase, we use AI to:

- Clarify – Explain concepts more simply or in different ways.

- Extend – Add examples, counterarguments, or additional perspectives.

- Analyze – Point out gaps, patterns, or inconsistencies in what we already produced.

- Simulate – Role-play stakeholders, generate alternative scenarios, or draft potential responses.

For students, a powerful pattern is:

- Write a first draft without AI.

- Paste that draft into an AI tool and ask for feedback in bullet points, not rewritten paragraphs.

- Use those bullets as a checklist to revise in their own words.

The choice to request bullets instead of full paragraphs matters. Once students see a polished AI-written paragraph, it’s cognitively hard not to copy its structure and language. Bullets, on the other hand, prompt students to rethink, rephrase, and truly re-own their work.

For faculty, AI-middle might look like:

- Asking AI to propose several ways to sequence activities for a class session they’ve already outlined.

- Requesting sample discussion questions or formative checks aligned to outcomes they’ve already defined.

- Getting pacing ideas or suggestions for differentiating a lesson for varied readiness levels.

Here, AI is helping with options and efficiency, but the instructor still chooses what fits their students and context.

For staff and leaders, AI-middle can be much heavier when they already know the content deeply:

- Summarizing a long report into an executive brief.

- Turning meeting notes into a draft message or presentation.

- Generating alternative wording aligned to existing strategic priorities.

There isn’t a universal “50% AI / 50% human” rule. If someone is new to the content (like a student learning a concept for the first time), the human-first and human-last phases should dominate. If a staff member knows a process or plan inside and out, AI can reasonably carry more of the drafting load—because the human is still fully qualified to review and correct it.

Human-Last: Reflection and Refinement

The bottom bun of the sandwich brings everything back to human judgment. After AI has done its part, we still need a human being to review, reflect, and refine.

For students, human-last means:

- Revising their work based on AI’s bulleted feedback, but using their own language and examples.

- Reading their work aloud to check if it still sounds like them.

- Reflecting briefly: What did AI help me understand or notice that I missed before? What is my takeaway?

For faculty, human-last might involve:

- Reviewing an AI-suggested lesson outline and customizing it for their actual students.

- Removing activities that don’t fit the course context or time constraints.

- Noting what worked in class and making adjustments for next time—sometimes with AI’s help, but always with human professional judgment in the lead.

For staff and leaders, human-last is the safeguard that ensures AI’s efficiency doesn’t override mission or values:

- Checking that AI-generated summaries accurately reflect the nuance of a strategic plan.

- Aligning messaging with institutional priorities, equity commitments, and local realities.

- Editing for tone, ethics, and clarity before anything goes out the door.

Across all these roles, one principle holds: no AI output leaves the kitchen without a human final pass. The AI Sandwich offers a visual reminder of that commitment. By keeping human thinking at both the beginning and the end, and AI squarely in the middle as a collaborator, we can use powerful tools without outsourcing the very learning, judgment, and creativity education exists to develop.

Conclusion: Centering Humanity in an AI-Driven Era

Artificial intelligence holds enormous promise in education, but that promise is only realized when humans remain the architects of meaning. AI can suggest ingredients, but it cannot decide what kind of sandwich we need. In the AI Sandwich model, the human is the one who assembles it: choosing how big the top bun should be, how thick the filling needs to be, and how substantial the bottom bun must be for the task at hand. Sometimes the “human-first” layer will be extensive and the AI contribution small; sometimes AI can shoulder more of the drafting work because the human already knows the content deeply. The point is not to reach a perfect ratio, but to keep humans in charge of that decision.

Seen this way, the AI Sandwich is a cultural mindset for an AI-rich educational environment. We still need human curiosity to ignite the process, to ask the first questions, to sketch the messy draft, to think outside the box. We need learners, faculty, staff, and leaders to keep exercising the parts of their brains that generate original ideas and personal voice. AI can then amplify that work: turning brainstorms into polished documents, transforming outlines into presentations, generating variations on examples, or accelerating the busywork that is not central to the learning outcome. In many cases, the slide deck or formatted report is not where the learning lives; it is simply the vehicle. AI can help build the vehicle while humans focus on the ideas it carries.

The bottom bun, the human-last phase, is where judgment completes the process. No matter how strong the AI support in the middle, a human still has to read, revise, and decide. Students must ensure the final product reflects their understanding and their voice. Faculty must align AI-assisted lesson ideas with what they know about their students and context. Staff and leaders must check that AI-generated summaries, plans, or communications align with institutional mission, values, and commitments.

This human-first, human-last mindset applies across the institution, but it intersects especially strongly with transparency. The TrAIT framework focused on clearly signaling when AI is allowed, restricted, or prohibited and why those boundaries exist. The AI Sandwich extends that work into the how. When we explain to students why some tasks ask them not to use AI at all, why others invite AI only after a human first draft, and why some activities allow heavier AI involvement, we are not just enforcing rules—we are teaching metacognition. We are helping them understand how AI can either undermine or enhance learning, depending on when it is used.

When students understand the “why,” they are far more likely to buy into the “how.” The AI Sandwich gives them a clear mental picture to carry forward: think first, collaborate with AI second, and think again at the end.

If we can embed that pattern into the daily habits of leaders, faculty, staff, and students, we can move into an AI-driven era without surrendering what makes education fundamentally human.

Author’s Transparency Note on AI Use in This Article

Transparency note: The AI Sandwich concept is Dr. Lane Freeman’s original idea, developed over multiple presentations with educators. For this article, he used a generative AI assistant as a co-writer across multiple prompts to help organize sections, refine wording, and expand examples. He then carefully reviewed, revised, and fact-checked the draft so the final structure, arguments, and responsibility for its content remain fully his.

Dr. Lane Freeman is the State Director of Online Learning for the North Carolina Community College System and a national leader in AI integration in education. He has trained thousands of educators across the country on responsible AI adoption, innovative instructional design, and future-ready teaching practices. His work includes the TrAIT Framework and the statewide NC3MI Master Instructor Program.